Deepfakes and the History of Faked Photography

9 January 2023

Judged against the panicked discussion of deepfakes in 2018, actually existing deepfake technology has been something of a disappointment. The r/deepfakeSFW subreddit is full of hobbyists face-swapping cult films. The most common genre of deepfake is the face-swapped pornographic video. These videos clearly convey a message about the fungibility of women, but they aren’t convincing anyone that the actresses depicted have really been filmed having sex. Concerns about deepfakes of politicians have turned out to be a damp squib. Presidents Zelensky and Putin have each been targets of deepfake videos, but the videos are so janky that it’s hard to believe they were a serious attempt at misinformation. The most convincing videos, such as the Tom Cruise videos produced by Metaphysic with Miles Fisher, rely heavily on low-tech techniques of impersonation, and take skill and resources to produce. At the time of writing, the highest-profile deepfake video is not futuristic political propaganda, but an entry in America’s Got Talent (it came fourth, beaten by an all-female Lebanese dance troupe).

In most discussions, the primary source of evidence about the probable effects of deepfake videos has been hypothetical scenarios in which very realistic deepfakes are widespread. Perhaps the clearest version of this hypothetical threat is articulated by Regina Rini. She argues that video evidence is socially important because videos provide us with a source of knowledge which is independent of testimony. When we form beliefs based on what is depicted in a video, we do not need to rely on the videographer herself (she might not have noticed what we see) or make inferences based on our background beliefs about the reliability of the technology. For the viewer, it feels as though the video camera acts as an extension of their own eyes. In Rini’s view, the independence of video evidence from testimony is important because it enables video evidence to act as a backstop for testimony, allowing us to double-check testimony against the video record, and passively regulate testimony with the threat of being found out. Her concern is that realistic deepfakes would undermine this general faith in video evidence, forcing us back to a kind of testimonial reliance on individual videographers, which would undermine the backstop role of video evidence.

Hypothetical cases are a valuable tool in philosophical inquiry, but it is curious that discussions of deepfakes haven’t drawn on the history of photography, which reveals a rich tradition of photographic manipulation.

In the 1920s and 30s, the New York Evening Graphic was notorious for presenting composite photographs (known as composographs) as genuine. These images often illustrated key moments in scandals, combining staged backgrounds, actors, and stock photos of the dramatis personae into photographic collages. Composographs frequently featured sexualised pictures of women, earning the newspaper the nickname ‘the Pornographic’.

The Graphic’s composographs are a clear precedent for pornographic deepfakes. The Graphic wasn’t a reliable source of news, but its depictions of women were still important in communicating misogynist representations.

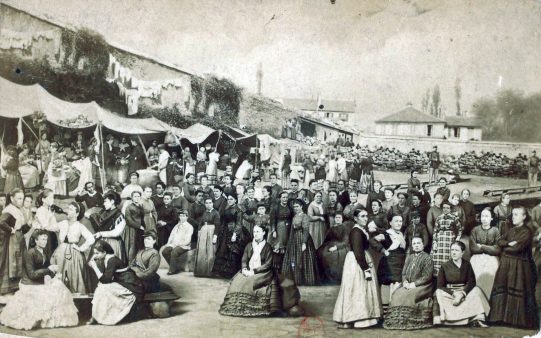

In the 1870s, the portrait photographer Eugène Appert produced a series of images called Les Crimes de la Commune, illustrating various apparent atrocities carried out during the Paris Commune. These images were widely sold alongside portraits of communards, and seem to have convinced a large portion of the French public. Like the Graphic’s composographs, Appert’s series was based on trickery: he photographed key locations and posed actors, then cut and pasted photographs of the real participants’ heads into the scenes (some of these portraits he had in stock from his photography business, others were taken in prison). He finished the pictures by painting in dramatic flourishes: smoke, blood, and shadows.

Appert’s Crimes series highlights the basic continuity in photographic manipulation techniques. His recipe (stage the photograph, swap in photos of faces, retouch to add colour) is effectively the static analogue of the techniques used by the producers of face-swapped deepfakes.

Until the 1880s it wasn’t possible to print photographs in newspapers, so editors employed engravers to copy pictures. Inevitably, many of these drawings were embellished, and when the introduction of half-tone printing allowed the reproduction of photographs, journalists kept up the practice of faking. In 1884 the engraver Stephen Horgan wrote in Photographic News: “Very rarely will a subject be photographed with the composition, arrangement, light and shade, of a quality possessing sufficient ‘spirit’ for publication in facsimile. All photographs are altered to a greater or lesser degree before presentation in the newspaper.” In 1898, an editor of a photography magazine summed things up more pithily: “everybody fakes”.

The situation in illustrated newspapers in the US through the 1880s and 1890s is perhaps our best historical model for the dystopian scenario sketched out by researchers who are concerned about deepfake videos. If those who worry about deepfakes are right, then the prevalence of manipulated photographs in newspapers should have led the American reading public to adopt a generally sceptical attitude towards newspaper photographs, fundamentally transforming their trust in photographic technology into a form of quasi-testimonial reliance on individual photographers.

The historian and journalist Andie Tucher argues that the actual consequence of these photographs was that photographers engaged in an internal debate about the proper norms of photographic practice (drawing on previous debates about faking in written journalism), leading to both the establishment of social norms around manipulation, and the development of a professional identity which would later be called photojournalism.

The fact that this solution was a change in social norms highlights an important fact about our reliance on recording technology. When we rely on a piece of technology, we are not just relying on a piece of machinery. We are also implicitly relying on a group of people (designers, operators, and maintainers) whose work is required for the machine to function properly. Drawing on Sandy Goldberg’s work on the epistemology of instruments, we might think of this as a kind of diffuse epistemic dependence: when we rely on someone’s testimony we are relying on a particular person, whereas when we rely on a piece of technology, we are relying on the social practices surrounding it which allow it to work as it should.

If we think that the knowledge we gain from photographs and videos involves diffuse reliance, the existence of deepfakes looks less like a fundamental threat to our reliance on technology, and more like evidence that there is less than perfect compliance with the appropriate social norms of operating recording technology. This is bad news, but it doesn’t raise general sceptical concerns or transform the kind of knowledge we can gain from the technology. What we are facing is not an unprecedented historical threat, but the latest episode in an ongoing debate about the proper place of manipulation in recording techniques.

This reframing of deepfakes as a problem within the social practices surrounding video technology not only helps to explain why deepfakes have not led to the epistemic apocalypse which seemed imminent in 2018, but also points us towards a different set of interventions. Rather than investing our hopes in new software for detecting deepfakes, we might be thinking about how to establish social norms against the production of highly realistic deepfakes, borrowing some of the opprobrium which was attached to ‘faking’ in photojournalism in the twentieth century. Rather than touting deepfakes as an exciting new technique in video production, we might be thinking about how to undermine the body of practical knowledge required to produce realistic deepfakes. Rather than focusing on deepfakes of politicians, we might be focusing on the use of deepfakes against communities which are already the targets of ignorance-producing social systems, and are less protected by social norms around misinformation. Once we recognise the connection between technology and social practices, there is no such thing as a purely technological problem, and the history of photography shows us that producing accurate recordings is very much a social problem.

Image credit: This image comes from the Gallica online library under the ARK identifier btv1b84329293/f127 (photomontage montrant une representation de la prison des chantiers a versaill 1602819 (cropped).jpg).

“The essay is part of a project that has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement no. 818633)”.
