What’s to ban: social media policies on hate speech and reclamation

24 August 2020

Social media policies on hate speech are highly controversial and have recently sparked a lively debate.[1] On the one hand, social media companies have both the right and the responsibility to police the content and comments shared on their platforms; otherwise, the platforms risk being used to promote hate, violence, and the like. Such policies concern not only the content of what we communicate, but also the language we use. The idea behind this is, roughly, that words matter: our language not only reflects our values but can also spread them. Psychology provides interesting insights here. We know, for instance, that hate speech and discriminatory language harm not only their direct targets but also bystanders, with short-term effects such as discomfort and anger and long-term ones such as fear, low self-esteem, stress, drastic behavioral changes and depression (Fasoli 2011, Swim et al. 2001, 2003).

However, slurs, just like many other negative terms, can be reclaimed. They can be used positively in the face of oppression: under special circumstances, they can convey solidarity, intimacy, camaraderie and so on. Philosophers and psychologists have studied this phenomenon and highlighted the potential benefits of reclamation (Brontsema 2004, Kennedy 2002). Empirical research has found, for example, that when people self-apply a slur in a reclaimed way, they not only feel more powerful but also appear more powerful to bystanders. Moreover, the self-application of slurs can diminish the perceived offensiveness of the word at stake. Overall, when a slur gets reclaimed, subjects feel empowered and the term loses its potential to hurt and derogate (Galinsky et al. 2003, Galinsky et al. 2013).

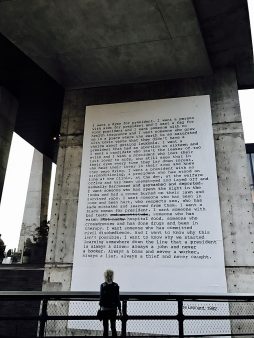

Social media platforms play an important role in spreading these new uses of language and setting new trends, in fostering this sense of solidarity, and in creating supportive communities all over the world. However, those in charge of enforcing hate speech policies may have a hard time distinguishing derogatory uses of slurs from reclaimed ones, especially when companies rely on algorithms rather than human moderators. Social networks sometimes ban reclaimed uses of slurs, and this risks becoming the norm in future policies. To mention just a couple of well-known cases: in 2017, Facebook[2] censored a post in which the lawyer Brooke Oliver recounted her victory in the legal battle for Dykes on Bikes to reclaim the slur in a trademark; in 2018, Instagram[3] removed, for failing to comply with its hate speech policies, a post by an account dedicated to sharing pieces of queer history that featured the activist Zoe Leonard’s famous 1992 poem ‘I want a president’. Examples abound. In many cases, not only are the posts removed, but the users are also banned from the platform for a certain amount of time.

Banning reclaimed uses of slurs (and reclaiming users) can be harmful in at least three ways. First, these measures end up further marginalizing people who are already marginalized: they censor, and literally exclude from the social network, those who rely on these platforms for support.[4] Second, these policies make it harder to tell and uncover the history of struggles for social equality, and to express one’s personal narrative and experience:[5] forbidding the reclamation of a slur means forbidding an important way to mock bigots, dissociate from them and raise awareness. Third, reclamation can do its trick (i.e. turning a harmful word into a non-harmful one) only if it becomes mainstream, that is, if people get on board.[6] Reclamation needs to gain visibility to be effective in the long run, and social media (together with music, TV shows, plays and films) play a crucial role in this, especially since online communication is so fast and wide-reaching. All in all, censoring reclaimed uses of slurs on social networks has the paradoxical effect of taking away a means of resistance from those who most need it.

Hate speech involves a great many judgement calls, so it is of the utmost importance that such calls take as many factors as possible into account. Hate speech policies are the result of a trade-off between the need to protect freedom of speech and the need to guarantee a safe space: such a negotiation has to consider whom a given policy harms. Simplifications such as ‘this term is just prohibited’ certainly make hard calls easier and save companies time and money. But over-simplifications can contribute to the very marginalization they are supposedly meant to fight. The fine-grained contextual judgement that hate speech seems to require is currently very costly, as it involves a large number of employees reviewing an enormous number of posts and comments in all the languages used on each platform. Should social media companies pay this cost? They should, if having a reasonable hate speech policy that is applied fairly is a crucial requirement for a social network rather than just a plus. Admittedly, it can be hard to detect reclamation and to tell standard and reclaimed uses of slurs apart, but this does not make it acceptable to condemn acts of reclamation or to ban reclaimers from social networks.

Our language activity is clearly very hard to reduce to an algorithm and the reclamation of slurs is just one among many instances of this trait: our language use is creative, productive, subversive and to some extent unpredictable. But let’s acknowledge this and come up with creative solutions, rather than just censor legitimate acts of protest.

References

Brontsema, Robin. 2004. “A queer revolution: reconceptualizing the debate over linguistic reclamation.” Colorado Research in Linguistics 17: 1-17.

Fasoli, Fabio. 2011. “On the effects of derogatory group labels: The impact of homophobic epithets and sexist slurs on dehumanization, attitude and behavior toward homosexuals and women.” PhD diss., University of Trento.

Galinsky, Adam D., Kurt Hugenberg, Carl Groom, and Galen V. Bodenhausen. 2003. “The reappropriation of stigmatizing labels: Implications for social identity.” In Identity Issues in Groups, edited by Jeffrey Polzer, 221-56. Bingley: Emerald Group Publishing Limited.

Galinsky, Adam D., Cynthia S. Wang, Jennifer A. Whitson, Eric M. Anicich, Kurt Hugenberg, and Galen V. Bodenhausen. 2013. “The reappropriation of stigmatizing labels: The reciprocal relationship between power and self-labeling.” Psychological Science 24: 2020-29.

Kennedy, Randall. 2002. Nigger: The Strange Career of a Troublesome Word. New York: Vintage.

Swim, Janet K., Lauri L. Hyers, Laurie L. Cohen, and Melissa J. Ferguson. 2001. “Everyday sexism: Evidence for its incidence, nature, and psychological impact from three daily diary studies.” Journal of Social Issues 57: 31-53.

Swim, Janet K., Lauri L. Hyers, Laurie L. Cohen, Davita C. Fitzgerald, and Wayne H. Bylsma. 2003. “African American College Students’ Experiences With Everyday Racism: Characteristics of and Responses to These Incidents.” Journal of Black Psychology 29, no. 1: 38-67.

[1] See for instance: https://www.thefire.org/whats-caught-in-the-wide-net-cast-by-hate-speech-policies/; https://www.wired.com/story/how-facebook-checks-facts-and-polices-hate-speech/; https://about.fb.com/news/2017/06/hard-questions-hate-speech/; https://edri.org/the-digital-rights-lgbtq-technology-reinforces-societal-oppressions/.

[2] https://www.thefire.org/whats-caught-in-the-wide-net-cast-by-hate-speech-policies/.

[3] https://www.intomore.com/culture/instagram-is-censoring-lesbian-content-for-violating-community-guidelines.

[4] https://www.wired.com/story/facebooks-hate-speech-policies-censor-marginalized-users/.

[5] https://medium.com/@thedididelgado/mark-zuckerberg-hates-black-people-ae65426e3d2a.

[6] https://www.good.is/articles/take-it-back-5-steps-to-reclaim-a-dirty-name

Picture: “I want a president” by Zoe Leonard installed at the High Line from: https://commons.wikimedia.org/wiki/File:Highline_art_Zoe_Leonard.jpg