Can Machines Manipulate Us?

22 January 2024

YouTube, Facebook, X (formerly Twitter), Instagram, Pinterest, TikTok, and chatbots like ChatGPT and Bard—all of them are manipulating you. More specifically, their algorithms are manipulating you. Or so we now commonly hear from pundits, activists, and academics. But just what would it mean for a machine, at least one short of a sentient AI, to manipulate us?

One possibility is that when people say things like this, they are just speaking imprecisely. What they actually mean is that certain human individuals or groups (designers and engineers, or the companies they work for) are manipulating us. It is the people behind the algorithms, who design them with the intention of having certain effects on us, who are ultimately the manipulators.

This explanation sounds reasonable enough. But it faces a serious limitation: today’s machine learning algorithms often work in unexpected ways that were never intended by their creators. Here is a simplified hypothetical example. Imagine that an engineer at Instagram builds a machine learning algorithm intended to suggest videos and advertisements that will be of interest to users. The algorithm measures interest by monitoring the amount of time users spend watching a video before scrolling on or exiting the program, and measures interest in advertisements by monitoring click-through rates. After some training, the algorithm optimises for these metrics by showing US-based users of all stripes extreme right propaganda videos urging insurrection, paired with advertisements for guns. Some users are sympathetic to the videos; others find them repulsive but engrossing. Both groups engage with the videos and advertisements at high rates, whether because they fantasise about insurrection or because they fear it.

It is tempting to think that at least the latter group has been manipulated into watching these videos and, possibly, purchasing a gun. But suppose that the engineer had no idea that their algorithm would have this result—they just thought it would direct people to videos and advertisements of antecedent interest. In that case, it doesn’t seem as though the engineer was trying to manipulate viewers. This seems to be a case where an algorithm manipulates people, but we can’t make this more precise by saying that it is really the engineer (or the company) behind the algorithm who does the manipulating.
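To make the hypothetical concrete, here is a minimal sketch of how an engagement-optimising recommender could end up favouring one kind of content without anyone intending it. Everything in it is an assumption for illustration: the category names, the invented watch-time figures, the `simulate_feed` function, and the simple epsilon-greedy strategy are not drawn from any real platform. The point is that the code only ever refers to watch time, never to what the videos contain.

```python
import random

# Hypothetical content categories and average seconds watched per video.
# These figures are invented purely for illustration.
MEAN_WATCH_SECONDS = {"cooking": 8.0, "sports": 10.0, "incendiary": 25.0}


def simulate_feed(rounds=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy recommender that optimises only for watch time."""
    rng = random.Random(seed)
    categories = list(MEAN_WATCH_SECONDS)
    shown = {c: 0 for c in categories}        # times each category was served
    avg_watch = {c: 0.0 for c in categories}  # running mean of observed watch time

    for _ in range(rounds):
        if rng.random() < epsilon:
            # Occasionally try a random category to gather data.
            choice = rng.choice(categories)
        else:
            # Otherwise serve whatever has held attention longest so far.
            choice = max(categories, key=lambda c: avg_watch[c])

        # Simulated user response: noisy watch time, never negative.
        watched = max(0.0, rng.gauss(MEAN_WATCH_SECONDS[choice], 3.0))

        # Update the running average for the chosen category.
        shown[choice] += 1
        avg_watch[choice] += (watched - avg_watch[choice]) / shown[choice]

    return shown


counts = simulate_feed()
most_shown = max(counts, key=counts.get)
print(counts, most_shown)
```

Under these assumed numbers, the feed drifts towards the category that holds attention longest, whatever the reason viewers keep watching. Nothing in the code expresses an aim of pushing that content.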

To get the problem of algorithmic manipulation more squarely in view, let’s step back and ask: what is manipulation in general? Most philosophers working on the topic hold something like the following view: manipulation requires (i) that the manipulator intend (either consciously or unconsciously) to get their target to do something, while (ii) showing insufficient concern for that target as an agent. Many philosophical discussions of manipulation have focused on this notion of ‘showing insufficient concern’. This is undoubtedly a tricky notion, but we can set it aside for now.

The bigger problem, to begin with, is that algorithms don’t seem to have any intentions at all. Nor, in a case like that of our hypothetical Instagram algorithm, do their human creators need to have manipulative intentions for them to end up being manipulative. This suggests that we should take intentions out of the story of manipulation. Perhaps what manipulation requires is for the manipulator to influence someone’s beliefs, emotions, or desires in ways that cause them to fall short of some ideal—for instance, by bypassing or subverting their rational decision-making process. This is just what the hypothetical Instagram algorithm does: rather than inviting viewers to reflect on whether they would like to spend their time viewing right-wing propaganda videos, it simply exposes them to such videos and serves up more as viewers’ strong visceral reactions keep them scrolling. So perhaps this is what we mean when we say that algorithms are manipulating us. It isn’t metaphorical or imprecise talk at all, but rather a straightforward observation about how these algorithms prompt us to form certain desires (such as the desire to watch more incendiary videos, or to purchase a firearm) in a way that bypasses our rational decision-making process.

However, there is a reason why relatively few philosophers have pursued an intention-free account of manipulation: it threatens to classify all sorts of things that don’t seem like manipulation as instances of manipulation. For instance, consider a case where Daniel, now three months sober, has recently left a destructive relationship built on the mutual overconsumption of alcohol. At dinner with friends, he sees someone who looks very similar to his ex-partner. Suddenly, he is flooded with a desire to get drunk and relive the early excitement of his former relationship. This person has directly influenced Daniel’s desires in a way that bypasses his rational decision-making process. But, contrary to what the intention-free approach might suggest, this person hasn’t manipulated Daniel.

So we are left with a quandary. We can try to revise this way of understanding manipulation so that algorithmic manipulation of the kind we have been discussing counts as manipulation but cases like Daniel’s don’t. Or, we may need to face the fact that we don’t really know what we mean when we say that algorithms and machines are manipulating us.

We are optimistic about the first option, and in our paper ‘Manipulative Machines,’ we develop the intention-free view. We keep the idea that a feature or behaviour (for instance, the Instagram algorithm’s recommendation behaviour) only counts as manipulation if it tends to influence the behaviour of others in ways that bypass their rational decision-making process. But we add that for such behaviour to count as manipulation, its presence must also be partly explained by its tendency to have this kind of influence. This, we suggest, is what differentiates the Instagram algorithm from the person whose appearance arouses in Daniel the desire to go on a bender.

Even if our hypothetical engineer never intended for the Instagram algorithm to work this way, part of the explanation of why it shows people extreme right propaganda is that showing people such propaganda tends to get them to watch more such videos than they would have rationally chosen to. In contrast, the reason why the person Daniel sees looks the way they do has absolutely nothing to do with how Daniel is likely to react to them. Algorithms, then, really can manipulate people—and not just in the ways that their designers may intend.

One reason for fixing up an intention-free concept of manipulation is that seemingly manipulative algorithms are a pressing concern. They pose serious threats to people’s autonomy and well-being in online life. We’d like to know where to place responsibility for manipulation and its harms, and we’d like to prevent at least some of it from happening in the first place. With the intention-free concept of manipulation, we can rightly identify the manipulator as the algorithm itself. This allows us to see, in turn, that it is not the intentions of particular engineers we ought to be concerned with. Rather, it is incumbent on the companies producing the algorithms that structure our online lives to take appropriate precautions with respect to these potential manipulators before releasing them into the world.

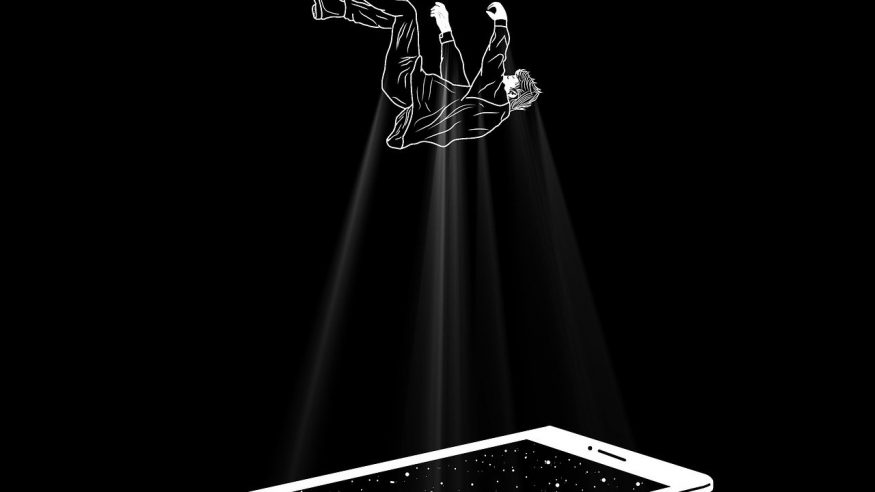

Image by Pheladi Shai from Pixabay
